Here is my latest patchwork – definitely patch but not work! Finished late in the night!

What shall I do with it? Hang it on a wall or in the bin. I shall wait a little and find out what people think. I learnt a lot during this job – use thicker material, make two frames and above all I need to learn to be patient.

My wonderful GSP was found in the streets of Ireland and given to us by the local dog rescue. We did not know anything about this breed but soon had to learn. He is a hunter and nothing seems to break his focus when seeing, smelling a prey.

This morning we were walking in the local fields when he smelled/heard a pheasant – alert. Josette – hang on to the lead. Then the stupid bird decided to sing and then to fly off – high alert. Heels digging the ground, sweat pourring out, I managed to stop him (the dog) flying off. Everything calm down again so we continued our walk. In the next field we met two deers. I started to shake with anxiety. No problem, he did not see them. What a hunter! No wonder he was left wandering the streets of Ireland.

Four weeks ago, I took Dylan for a long walk in the fields as we do every morning. Joy! We usually meet lots of wildlife: partridges, pheasants, red kites, squirrels, rabbits and two types of deers – the little muntjacs and the beautiful roe deers.

Dylan and I enjoying the fresh air, the changing of colours and dreaming of sunny Autumn days – I think called Indian Summer. Dylan ferreting for a field mouse – never to be caught.

In general people do not understand my dog. When walking on a lead and he sees a squirrel – there’s a big battle of wills, tightening of the muscles, digging the heels in the ground etc. (that applies to both of us!). And then somebody will say “Don’t worry, he will never catch it”. I know that, but what that person forgot or did not notice is that I held the other end of the lead and I do not fancy running around a tree or being pulled in a bush. Oh the joys of having a hunting dog.

Deers waiting patiently for us to get out of the way

…and sometimes patience runs out and so do the deers. I would love to show you a video of them running away with their little white tail bobbing up and down in the long grass, but that will be for another day when I have mastered the art of displaying videos in WordPress.

Unfortunately, instead of the dreamt-of Indian Summer, we got rain, and rain, and more rain, which made the paths very slippery. And, you guessed it, I fell down about a mile (1.5 km) from home. I heard a little pop and thought, here we go, a sprained ankle! So I picked myself up and started to hobble back home, through the fields and woods, and, for once, Dylan was superb – he did not pull – achievement! My husband drove the car to the entrance of the woods and home we went.

Next day we went to the hospital and were told that I had broken my ankle. Since then I have been wearing that awful black boot – day and night.

I will not be dancing this Christmas but I hope that in a couple of months Dylan and I can resume our walks

Unfortunately my very old companion died exactly a year ago. After all the tears and heartbreak, we had to decide – should we have another dog? Of course we should so at the begining of the year we started visiting rescue centres.

blah blah blah blah blah blah blah blah blah blah blah blah

Terance, as he was called, is 3 to 4 years old, abandonned somewhere in Ireland and then moved to England to look for his forever home.

The poor miserable little doggy. He looked so lonely and so much in need of love and tender care.

That was it! We had to take him home.

blah blah blah blah blah blah

We made it home

The first thing to do was to change his name. We could not cope with Terance – he is now Dylan. We then discovered what type of dog he is. Dylan is a German shorthaired pointer (GSP). Might not be the best breed for old people but what can we do? It is too late we love him.

German shorthaired pointers are hunting dogs – they need a lot of walks and playing times but they are also goofy which make them so funny. They need a lot of love and attention as well.

Life with a GSP

Going for a walk brings its problems. Dylan is a very strong dog that pulls me all over the place – left, right, forward even backwards. The toughest part is when Dylan meets a squirrel, a rabbit or a deer. We had a Fenton episode last week when the lead broke. Unfortunately I was too worried to film it as a road was nearby. All finished well as my big boy came back to me and no, he did not injure or kill the deer – I think he got scared.

blah blah blah blah blah blah blah blah blah blah blah blah

A few months ago, we bought him a toy which include a little tube full of treats. All what he needs to do is push the tube whith his nose and the treats will fall down. Not if your name is Dylan. He cannot work it out.

Another trait of these little guys is their love of water. Dylan’s paws are nicely webbed but you guessed it – Dylan is scared of water!

This breed is supposed to be highly intelligent – I have my doubts. I suppose intelligence manifests itself in different ways.

Isn’t he beautiful looking!

I am tired

There is a fly on the ceiling

I love banana

At the beginning of June the whole family took me to visit Cordoba, one of my to do list before I snuff it. What can you do and see in Cordoba?

La Mezquita-catedral –

From our Airbnb, We just need to cross the road and we are in front of one of the entrances.

We made it, we are in the Mezquita garden/yard covered in orange trees but no oranges.

This wonderful building was first a Roman temple and then was converted into a church by invading Visigoths in 572. Spain was conquered in 711 by the Moors and the building was then demolished and the grand mosque of Cordoba was erected on its ground cerca 784. The most famous feature of the mosque is its red and white arches – over 800 over them.

The mirhab or prayer niche is one of the magnificent and colourful features of the mosque – dark blues, reddish browns, yellows, and golds that form intricate calligraphic that adorn the horseshoe arch.

Mihrab is a semicircular niche in the wall of a mosque that indicates the qibla; that is, the direction of the Kaaba in Mecca and hence the direction that Muslims should face when praying. (Unfortunately, I am rather short and there was many people, so my photo leaves a lot to desire.)

Another horseshoe arch showing the magnificent colours.

The Christians reconquered Spain in the 13th century and converted the mosque back into a catholic church. By the 16th century, in the middle of the arches, the cathedral was built – renaissance style with the most beautiful Italian style dome.

When exiting the “arches”, you get back to the large garden of orange trees but still no oranges. and nobody there that could tell me the reason of the absence of oranges.

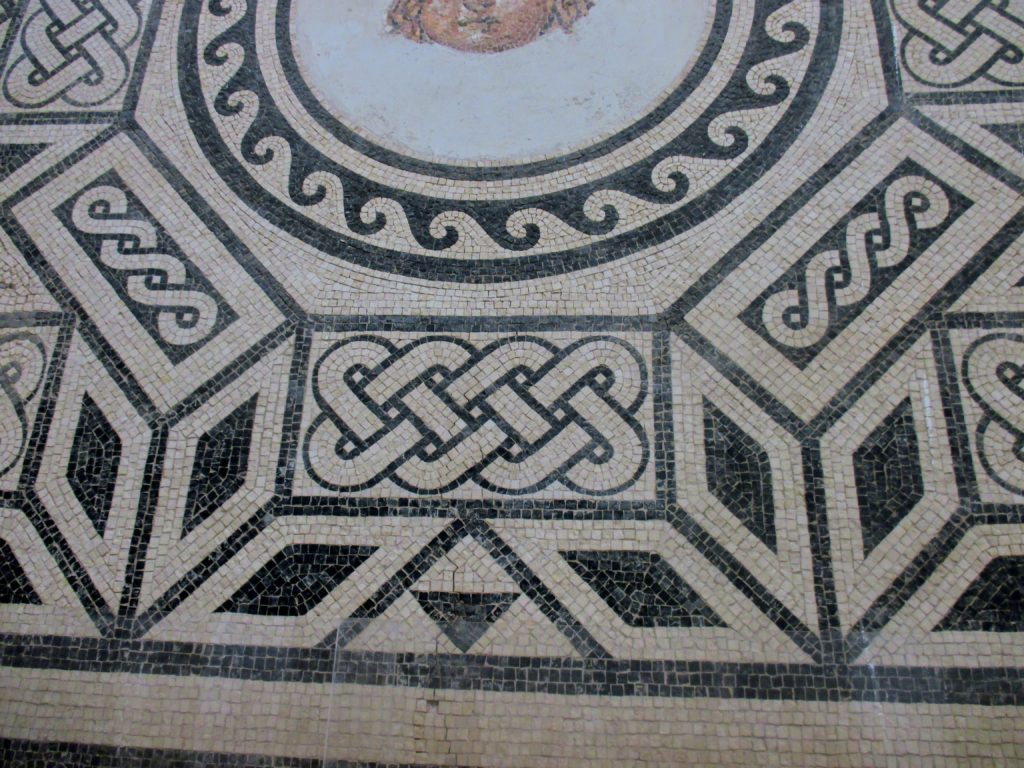

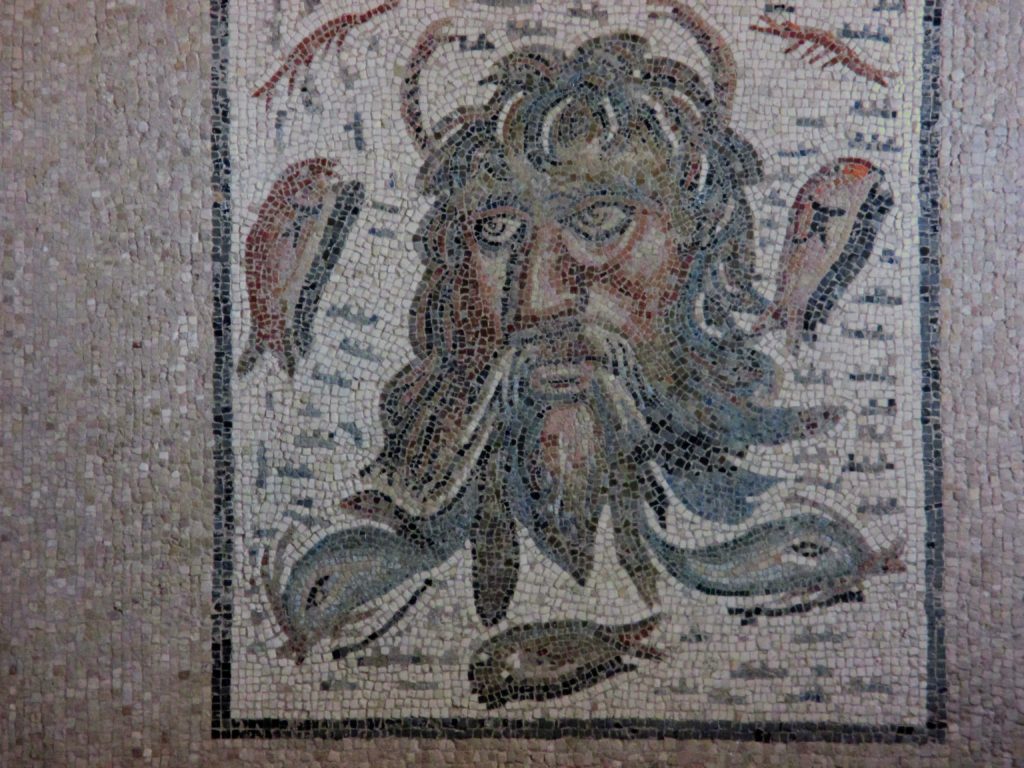

The Alcazar of the christian monarchs: History repeats itself. The site was first built by the Romans, then the Visigoths, then the Muslims to end up with the Christians. The future was not so bright as it became the seat of the Court of the Holy Inquisition (rooms were turned out into cells etc.). Its final role was to be the city’s public prison until 1931 when it was declared a Historic-artistic Monument and renovation was due. Now it has a display of roman mosaics – Below you can see some of the wonderful geometric and pictorial art on mosaic

There are no old buildings without a tower and this one applied the rule – so up we went on a narrow staircase with well worn steps. It was worth it, the view was fantastic – horse riding school, baths and garden.

equedestrian school

baths

gardens

There are many more beautiful buildings, streets covered in flowers etc. to see including great dinners to attend and of course enjoy some flamenco.

A year and a bit later…

That was last year when we planned to go to Granada in 2020. Unfortunately 2020 is a little different, no point in crying, there is always 2021+.

Keep safe!

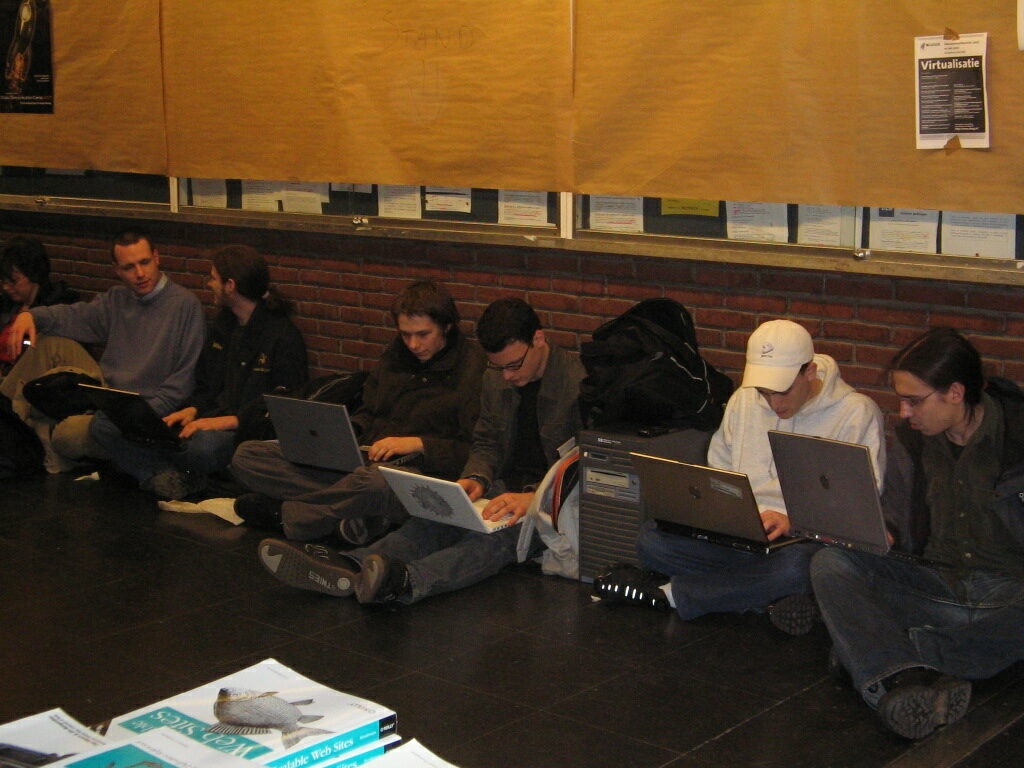

FOSDEM 2020, Brussels 1/2 February

Has it really been 19 years since I attended my first FOSDEM? Really this was the first FOSDEM as the first edition was called OSDEM. In 2000 I received a phone call from Raphael Bauduin: is O’Reilly not interested in attending FOSDEM? Didn’t you read my email? Oops! That was the beginning of a 13 year relationship.

For the first few FOSDEMs I had to carry chairs and tables – and God knows those tables were heavy. That happened on Friday evening before the meeting at the Roy d’Espagne. Saturday morning, up early to set up the O’Reilly table. Never had time to make a great display as the selling started as soon as I opened the first box of books. Going to lunch – no time. Going to the bathroom – difficult. The organisers were so kind: they always gave me a lot of coffee and food, and when it was not the organizers it was the attendees. Sunday evening was the next challenge – on one’s knees to pack the books, clean the floor etc. I have some great pictures of the professional broom, which was about 60cm wide. My biggest worry was whether UPS would come and pick up the few boxes of books left. I usually sent between 50 and 60 boxes and returned a maximum of 10. At the end the place was clean and the university could resume its duties on Monday morning.

A good weekend, the table is 99% empty. I must have selected the right books!

I met a lot of friends during those crazy weekends, often having dinner with the Perl guys on Saturday night, eating a lot of ribs somewhere else…. I must admit that the trip back to the UK was very quiet – nobody talked. We were happy but dead.

Can you go to FOSDEM without meeting Richard Stallman (not my preferred person but I am sure he did a lot of good for Open Source)? He was there for almost every conference. I also remember taking Tim O’Reilly one year who managed to break his arm on the Friday evening, so we spent most of the night at the local hospital. No fun!

I also met lots of O’Reilly authors who came just for the conference or to give a talk: Rasmus Lerdorf, Philip Hazel, D J Adams, Miguel de Icaza, Dave Cross, Brian King, Peter Hintjens and many, many more.

I also learnt some Belgian French – nonante, septante etc. What I liked best: the feeling of community, the growth, the oomph, “we’re all in this together” – german, french, italian, british, bulgarian, spanish, greek scandinavian and many more – you name it they were there. What did I not like so much: the weather, either rainy or snowy but always cold.

My biggest regret: I NEVER SAW THE FOSDEM DANCE AT THE END OF THE SHOW.

In 2008, I was invited to attend the Guadec conference at the Bahçeşehir University, Istanbul. Of course I arranged for a cheap flight and cheap hotel as per usual. I soon learnt that EasyJet lands in the Asian side of Istanbul – Sabiha Gökçen International Airport which is rather a long way to central Istanbul. Somehow I got there with the help of many minibus drivers. Got to my hotel. The reception area was very Middle Eastern with lots of colourful cushions, low lights and lots of little vases, candle sticks etc. Then I was taken to my room. At the first glance it was OK, the bed looked clean… I should have checked the bathroom.

After dropping my luggage I decided to walk around the area – I found out that I was staying only a few meters away from the Blue Mosque, and Hija Sofia etc. Wonderful! So I walked around trying not to get lost. I had diner and promised myself to go back before I leave the country. So back to the hotel I went. Got to my room and inspected the bathroom. Toilet looked a little funny with a jet in the middle of the back – that I found out later did not work – an experience I will no have on this occasion. Had a shower, that was OK but then I looked at the electrical wiring of the bathroom. DANGER! Do not switch on the light. The hairdryer wires were nude and hooked onto the wires of the light switch – too many nude wires for me in a wet area. I don’t think it would have passed the UK health and safety standards! Never mind, I was staying near the Blue Mosque! Next day I took a taxi to the university. The university looks great, The front garden goes down to the Bosphorus, seats and tables everywhere for the students to enjoy the magnificent view and the sunshine. I do not believe that it is a cheap university!

Next problem… no books.

As 99% of my dealings are with EU countries, I had forgotten that I must pay an import tax to get the books out of customs. After talks with the Librarian and the Head of Computing, we agreed that I would pay them with books. By lunchtime I was set. The table looked perfect and I was ready to sell lots of books which I did.

No conference dinner was arranged but instead we got a fantastic cruise on the sea. Wonderful evening with great sites. On leaving the boat, I looked for a taxi which I found very easily. I gave the driver the card of my hotel and off we went. As far as I could see we were driving towards the city centre but unfortunately I could not recognise anything as it was in the middle of the night. After driving for what seemed like hours, I somehow managed to make the driver understand that I wanted to stop in front of a big hotel. I got out of the taxi followed by the driver. With the help of the porter who spoke English, we managed to convince the driver to go away. Then another taxi was booked which took me to my hotel within minutes. What a night! The next day when I reported the incident to the conference organizers they told me that I should be aware that not all taxi drivers can read.

Did I enjoy my short stay. Yes, wonderful, I would go back anytime.

In 2008 I was invited to a Unix conference in Athens. From the airport I took a taxi to my hotel at a cost of 30 euros (this will be important later!). After checking in at the hotel I decided to take a taxi to the university. I arrived at my destination without a problem, but I still can’t understand why the taxi stopped and picked up a woman, then deposited her a few miles away before continuing the route to the university – no explanation was offered. Talking to my friends, I was told that I was charged the right amount for the trip. Very strange – I’ve spent many a sleepless night pondering that mysterious journey!

The conference was great, the audience very friendly. I was able to visit some of the old sites, as you can see from the pictures.

But all good things come to an end, and it was time to leave for Bucharest for the eLiberatica conference. I had to be at the airport at 6 am so I asked the hotel reception to book a taxi for pick up at 5 am. The longer my taxi took to arrive, the more stressed I felt. At long last it came. The driver was a middle-aged man with no striking features and his English was pretty good. He first told me not to worry – we would get to the airport on time. We may have made it on time but without a worry was another matter. As soon as the car started he lit a cigarette and had a swig of coffee, driving with one hand on a very busy road. Then his mobile rang! Still driving like a lunatic, he answered the phone then dialled a number and talked for a few minutes. During the journey he made at least 3 phone calls, drank coffee and water, and smoked three or four cigarettes. At long, long, LONG last we made it to the airport, the driver perfectly content and me rather green – I was shocked that we made it to the airport in one piece and grumpy. But it wasn’t over yet…

I retrieved my suitcase, ready to pay. “How much do I owe you?” “60 euros,” I was told. Since I’d only paid 30 euros on the way in, I couldn’t understand the 100% increase. I offered 30. He then asked if my company was paying. I said, “Yes, of course,” which was followed by the best response I could have imagined! With a big smile he just said, “60 euros – a little for you, a little for me”. In a bad temper, still nauseous and imagining my plane leaving without me – in short, in no mood to argue – I threw 30 euros at him and rushed inside the building. Nobody was going to spoil my visit to Romania.

As discussed previously, I was now in Sofia. After the traumatic tooth experience, I was finally ready to meet the organizer of the WebTech Conference. Once technical details had been discussed – had my books arrived, when could I set up the display table, etc., Bogomil Shopov took me to the centre of Sofia for sightseeing before saying, “We’ll meet here around 4 pm, then we can walk to the venue to set up for tomorrow” – then departed. So I was left alone in this new city. I was excited and ready to take millions of pictures (please note – I did not say I was good at photography). For example:

St Alexander Newski Cathedral: This newish cathedral is in the Neo-Byzantine style. It was created to honour the Russian soldiers who died during the Russo-Turkish War of 1877 1878, as a result of which Bulgaria was liberated from Ottoman rule. I discovered later that the basement was a museum for the oldest and most beautiful icons – not the cheap stuff you find on street corners.

The Russian Church (Church of St Nicholas the Miracle-

The Church of St George, an early Christian red brick rotunda with enormous walls of 1.4 metre thick. Considered the oldest building in Sofia, it is situated among the remains of the ancient town of Serdica. Originally a Roman bath or serving a Roman religious ceremonial function, the rotunda kept changing its role – Christian temple, mosque and now a tourist site with some Christian links.

Sofia is not just about churches (which, by the way, did not manage to make me religious), there are also some other interesting sights such as:

The Communist Party House: I think most USSR countries had such a building. In Sofia, it was used until August 1990 as the seat of the Central Committee of the Communist Party. It was set on fire during the Summer of 1990 by crowds protesting against the Soviet rule. On the outside only one thing is missing… the red star! It was removed after the collapse of the communist party and the newly acquired freedom of the people of Bulgaria. It is now home to the Museum of Socialist Art.

The Statue of Sveta Sofia (Saint Sophia): Erected in 2000 and standing in the plot once occupied by Lenin’s statue, Sophia stands on a 48 feet high pedestal. The 24 feet high statue is adorned with the symbols of power, fame and wisdom – the crown, the wreath and the owl. Allegedly Sophia was considered too erotic and pagan to be referred to as a saint. I wish I knew more about this fascinating lady.

As you can see from this very short post, Sofia is a very interesting city to visit with lots of history and culture. There is a lot more to see but for the rest you will have to go and see for yourselves. Personally I have been going almost every year and every time I discover something new and enriching.

Going back to my story – I had to go back to meet with Bogomil. That’s when I realised that the street signs were written in Cyrillic and my map was showing Latin script. Ouch! Panic! How do I get back? Fortunately, I had a pocket book showing the Cyrillic alphabet vs. Latin and somehow, translating letter by letter, I managed to get back to the meeting place. Since then both writings appear on the street corner so I suppose I was not the only foreigner that got caught.

At the end of my last post, I was about to fly to Sofia for my first conference in Bulgaria, WebTech 2006. The flight was perfect – as we landed, the passengers applauded the pilot. Nice! I have seen this gratitude only once before on a flight to New York on an Air India flight. I loved the old airport with its 2 luggage belts. It was so different from all the places I have been. Unfortunately this airport is now only used for domestic flights and I never had the occasion to use it again. You may remember me mentioning that I had previously broken a tooth just before flying out. Once I got to the hotel, I realised that I had to have that tooth repaired before the conference as it was cutting the inside of my cheek and talking became painful (peace at last for the techies!). There was no way I could attend the conference without a visit to the dentist. So I asked the receptionist if she could help me out. Very kindly she told me that she would try to arrange an appointment for the morning.

Morning arrived: I spoke to the receptionist and was told that she and the hotel driver would take me to the dentist. I was rather surprised as I never thought I would need an escort. So, in the car we went. I realised what a privileged area the hotel was in, as we were now driving on streets with lots of potholes and weeds growing everywhere. We passed big, ugly concrete blocks of flats and I seriously started to wonder what was going to happen to my mouth. The car stopped in front of the most rundown block. Great. By then I was a little edgy but never mind – repairs had to be done. We entered a very dark hall with a light that did not work (or was it a naked bulb? I cannot remember). I went into the dentist’s office which was very bright, the equipment reminded me of my first visit to the dentist when I was a child (yes, I know, a long time ago): the equipment was very clean-looking but very old. I began to take very deep breaths. What was going to happen to me? Would I be able to talk at the conference? Would I even be able to talk ever again?!

… when the young, friendly dentist began speaking English to me, I was almost disappointed. All that stress for nothing! He did a fantastic job, and eleven years later, the tooth is still there as good as new. I still do not know why I was escorted by two people but I can only be thankful to them. I could go to the conference and be my normal(?) self.

This photo could have been taken from the car on my way to the dentist. Thankfully these communist-era blocks are being modernised or replaced entirely. One should not forget that Bulgaria was part of the USSR until December 1989 when everything was ruled by Moscow, and most of the country was depleted of its riches. Bulgaria has done extremely well since its independence. Every time I go there I am surprised by the new look, the better way of life the people have achieved.

To be continued