National Hack the Government is an annual HackCamp run by Rewired State. As well as bringing great people together to have fun hacking and building things, we hope to improve transparency and open data, and to produce demo-able ideas that can be followed up and put into practice in real life. We do this by holding a competitive event for creating prototypes and building ingenious (and occasionally tongue-in-cheek) projects that help improve local and national services and make use of open data.

Now in its sixth year, the event has grown even bigger. It hosts local communities hacking on local data, issues and problems, which brings the “National” in the title to life. A bar-camp has also been added on the Saturday to share information and insight, and to organically define some of the challenges that will be hacked on. This explains the name “HackCamp”!

The event will be followed up by a show-and-tell of the finalists’ work – taking the winners from each centre and allowing them to demo their ideas to the government, businesses and the wider community.

National Hack the Government is sponsored by:

FutureGov is excited to be involved with National Hack the Government by hosting local communities’ challenges on Simpl Challenges, including Glasgow, Exeter, Leeds and Bournemouth.

Simpl Challenges is our innovation platform that connects local public services and organisations with innovators and ideas. Gathering ideas before the event on Simpl will give more time for learning, talking and listening to each other, and building some fantastic prototypes on the day itself.

Follow the links below to find out more and submit your ideas for your local event, or you can take part remotely by submitting your ideas to the UK section:

- UK: What are your ideas to code a better country?

- Glasgow: What is on your agenda for improving public services in Glasgow?

- Exeter: How can you use local data to hack Highways and Healthy Communities?

- Leeds: How can you visualise the city either for citizens, business, or both?

- Bournemouth: How can data improve the lives of people in Bournemouth?

You can also comment on the ideas submitted, so that the idea owners can get valuable feedback on their projects before the event has even started.

Rewired State creates bespoke hack events that bring creative developers, designers and industry experts together to solve real-world problems. It promotes and supports more than 1,200 of the UK’s most talented and inventive software developers and designers, and through the Young Rewired State network it nurtures 1,500 promising developers under 18 worldwide.

The 9th Annual PHP UK conference is over! Lots of people, a very posh venue, great food and very efficient and friendly organizers: Johanna Cherry, Sam Bell and Ciarán Rooney. What else do you need at the London PHP conference? Possibly some talks! I am told they were very good.

The conference had 3 tracks and 27 internationally known speakers. Of course, there were also some beer events at the local pubs and a Friday evening gathering in the exhibition hall.

The keynotes were:

- What Makes Technology Work by Juozas ‘Joe’ Kaziukenas – compared making wooden chairs to building apps

- The Future of PHP is in the Cloud by Glen Campbell – reviewed some of the key developments in PHP over the last few years and outlined how PHP can keep pace with the explosive growth of the cloud.

Some interesting talks included:

- PHP in Space by Derick Rethans – how PHP can be used for all kinds of terrestrial and non-terrestrial purposes … Expect trigonometry and other maths, and rocket science/explosions! It sounds like this talk would have been great fun to attend.

- Debugging HTTP by Lorna Mitchell – Curl, Wireshark and Charles, the tools you will want to have at hand. Most of the comments read “great talk, will be using Charles…”. I have a very special relationship with Lorna because she helps me market her book PHP Web Services to her audience. Well done Lorna!

- PHP at the Firehose Scale by Stuart Herbert – Here’s one to give the PHP bashers a well-deserved black eye! Twitter is one of the world’s best-known social media sites, handling over 500 million public tweets a day (that’s around 6,000 tweets a second). How do they do it? With the help of PHP of course.
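
The “around 6,000 tweets a second” figure is a rounded daily average. The quick Python check below divides the daily volume by the 86,400 seconds in a day. It assumes the tweets are spread evenly, and the helper name per_second is only for illustration. Real traffic is bursty, so peak rates run well above this average.

```python
# Convert a daily volume into an average per-second rate.
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def per_second(events_per_day: int) -> float:
    """Average events per second, assuming a perfectly even spread over the day."""
    return events_per_day / SECONDS_PER_DAY


rate = per_second(500_000_000)
print(f"{rate:,.0f} tweets per second")  # prints "5,787 tweets per second"
```

About 5,800 a second, which rounds comfortably to the quoted 6,000.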

Other activities:

- If you were suffering from information overload and wanted to relax, you could head to THE CLOUD BAR (presented by Engine Yard), an awesome chill-out space with free swag, free coffee, and free info about everything cloud.

- Or, if you were feeling full of energy, you could join the HACKATHON (presented by JetBrains). It ran alongside the main tracks and late into Friday night, using Sochi Winter Olympics API data. Prizes were awarded throughout the Hackathon for the most innovative hacks, including two free tickets to next year’s conference, PhpStorm licenses and more.

Make a note in your diary for the 10th London PHP Conference, which I am sure will be even more awesome.

The biggest two-day Open Source meeting in Europe is over! As you know, FOSDEM is a free event that offers open source communities a place to meet, share ideas and collaborate. It is renowned for being highly developer-oriented and brings together 5,000+ geeks from all over the world.

O’Reilly has sponsored FOSDEM since 2002, the year it changed its name from OSDEM to FOSDEM, and I have been going to FOSDEM ever since. I don't attend the talks, though: I sell numerous O’Reilly books with my colleagues. Our tables are still in the H Block, but the location has improved. We are now a few metres away from the doors, which means it is no longer so cold. The organisers and the delegates look after us very well and bring us tea and coffee, but we still cannot have lunch until 4 pm because we are so busy. Please note: this is not a complaint, just a fact. Thank you guys for bringing us food and drinks.

For the last couple of years, I have started the weekend by going to the Delirium to meet some friends. The Delirium is a huge pub, two minutes from the Grand Place. I believe most of the drinks are sponsored, so you can imagine the number of people going there. It is not big enough to cater for everybody, so lots of people are drinking, talking and greeting each other in the street. After an hour or less and a long wait for a coke, I had to leave, as I was already thinking of getting up early to set up for the next day.

This year we were incredibly lucky. Our 51 boxes of books were at the other end of the hall – not good. Our luck changed with the arrival of 3 guys from southern Germany. Unfortunately, I do not know their names or even the town they came from. At around 7 am on Saturday morning they came into the hall and said something like “it is cold outside and it is raining, can we stay in?” “But of course, no problem,” was the answer, “but you might have to help us with these boxes.” And those 3 great guys loaded the boxes onto a very dilapidated trolley (several trips), brought them over and opened them for us. To me these three gentlemen epitomise FOSDEM – helping each other.

For some of you FOSDEM is the meeting of great open source minds. For me it is the meeting of friends. Some I see only once a year and some more often, but we are always very happy to see each other. There I learn about your achievements and your dreams (one moved to Facebook in San Francisco, another one had a baby, and so on). One man very proudly showed me one of the first O’Reilly/FOSDEM bags that we created years ago. With a lot of pride and very carefully, he took the bag out of his pocket and filled it with new books.

I will not bore you with a description of the content of FOSDEM; you can see that in Philip’s (Fosdem.org) video below.

Saturday evening we had dinner with the Perl mongers – again a very multinational gathering. As the dinner was for 50 people, I will only mention a few – Liz and Wendy, the famous Dutch duo who always sponsor these dinners; Ovid Poe, O’Reilly author; Laurent Boivins and Marc Chantreux, Perl France; Sawyer X who I hope will be interviewed soon about Dancer and published on this blog; Marian Marinov, from Sofia, who is organizing YAPC::Europe 2014 (22nd-24th August). I think I only talked about YAPC and how to make it an even greater conference.

Two of our authors came to see us:

Pieter Hintjens, author of ZeroMQ

Anil Madhavapeddy, co-author of Real World OCaml

Pieter and Anil, should you be reading this post, I can confirm that we sold all the copies of your books – thank you for the marketing assistance during your talks.

I met Sarah Novotny, co-chair of OSCON for the last couple of years. Unfortunately, FOSDEM was so busy that we had no chance to talk beyond a very brief greeting and a “see you later”. I was also extremely happy to meet Constantin Drabo, the leader of the Burkina Faso Java User Group.

On Sunday evening, after I had packed 7 boxes of leftover books, somebody told me that I was the mother of FOSDEM. Then I thought: I am not the mother, but I feel like a great-grandmother, or like somebody who has run a couple of marathons in two days :))

There is no typo in the title of this post. It intentionally reads ‘&&’. && is a boolean operator used in many programming and scripting languages. Why would an artist care about a boolean operator? That’s correct: because this particular artist is also a geek. “What’s a geek?”, other artists might ask. A geek is someone interested in tech (programming, devices, electronics), but also interested in social interaction.

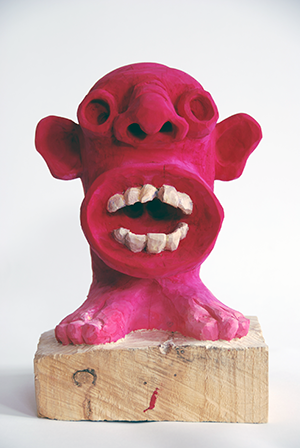

The artist part in the title refers to the fact that I paint and sculpt. The geek part is about my interest in PHP and Zend Framework, and in a few other things one can program with and for the web (like 3D scenes). To make things worse, I also like to write. Why worse? You guessed right again: it leaves me craving time.

Can I really be an artist and a geek (and a writer) at the same time? Or will one or both suffer from the fact that a day has only a bare twenty-four hours? That’s where the boolean operator comes into play. It’s up to you. If you think I’m really an artist and I’m also really a geek, then both operands (Artist and Geek) evaluate to true (as geeks tend to say), and so the entire expression, ‘Artist && Geek’, also evaluates to true. If either operand is false, the whole expression is false.
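
To make the boolean logic concrete, here is a tiny truth table in Python. Python spells && as ‘and’, and the function name artist_and_geek is purely illustrative. As with && in PHP or C, the result is true only when both operands are true. Evaluation also stops at the first false operand.

```python
from itertools import product


def artist_and_geek(artist: bool, geek: bool) -> bool:
    # Python's 'and' plays the role of &&: the result is True only if both
    # operands are True, and it short-circuits, so the right-hand operand
    # is never evaluated when the left-hand one is False.
    return artist and geek


# Print all four combinations of the two operands.
for artist, geek in product([True, False], repeat=2):
    print(f"Artist={artist!s:<5}  Geek={geek!s:<5}  ->  {artist_and_geek(artist, geek)}")
```

Only the first row, where both operands are True, ends in True.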

Leaving it to others to decide whether I’m either of the two saves me a lot of headaches and hopefully some time. So although I definitely think of myself as Artist && Geek, I shall not be bothered whether this title is true or false.

With that out of the way, I should come to answering Josette’s question that led to this post: what’s it like to be both?

Being an Artist and a Geek

The fact that I seem to be always fighting for time is not the most interesting part. A lot of people do and some even go on training courses to learn how to manage their time. I don’t. Why not? Because losing some time every now and then is part of being an artist. In general, it is part of being creative. What happens when you lose time? You lose time either because you are not paying attention to it, or because someone else is wasting it for you. Wasting? Not paying attention? Let me look into those two a bit further.

Not paying attention

Not paying attention reminds me of my teacher in second grade. She shouted at me when I sat in her classroom daydreaming. Is daydreaming a waste of time? Daydreaming is what creative people do to get ideas. Nowadays, I do not get enough time to daydream. One reason is that I have a day job as a self-employed geek. Another is that I’m addicted to typing code and watching it come to life, so I code and write about coding on as many evenings as I can steal from the remainders of my social life. Whenever I do get a little time to daydream, it tends to be on the drive to work when the sun is rising from the early morning fog:

It is dangerous to daydream in the car while driving, so the dream only lasts long enough to capture a picture (a few milliseconds are sufficient) that I can paint later, on one of the rare days that I can spend in my art studio. I see other artists develop really creative and great ideas and I can tell that they spent a lot of time dreaming. I’m not a violent person, but it’s better that my second grade teacher and I do not ever meet again.

Wasting time

Can time really be wasted? If in this busy life, you have to wait for your dentist for half an hour because he needs a little extra time to help a new customer, is your time wasted? What would the Artist do with that time? He would look at the other people waiting. He would imagine what kind of lives they live, maybe even speak to them to find out. He would draw a sketch of the bored and waiting and later turn it into a painting. But what does the Geek do? He is prepared for this kind of situation: he takes out his MacBook Air that he can carry everywhere because it weighs next to nothing. And he starts coding, because he’s an addict, but also because he knows that since there is no pressure, he might get a better idea than when facing a tight deadline at work. One could state that at this point the Geek really gets in the way of the Artist. On the other hand, the Artist will help the Geek to be creative, since coding is considered art or poetry by many. Thinking about it, the Geek should have remembered to bring his iPad as well so that the Artist could draw on it.

The Artist and the Geek helping each other

All this may seem unfair: the Geek gets in the way of the Artist, while the Artist helps the Geek type code poetry. But the Geek also helps the Artist by supporting him financially. The first thing people want to know about an artist is how he gets by money-wise. In my case, the Geek allows the Artist to do anything he likes, as long as time permits (which, as we saw earlier, is not very long). But spending a lot of time on a work of art does not necessarily make it better. The conception and execution of a painting may take months or even years to germinate. Alternatively, a painting can be thrown at the canvas in an outburst of creativity, after pressure-cooking in the painter’s mind for a considerable amount of time. All of this can be done independently. There is no need to sell any of these artworks, although most of them are for sale and many will eventually find a new owner.

For the above painting I did four different small sketches in different types of paint, only to figure out what should be on it and what would better be left out. It took me four years to decide.

Today’s art

There is something about today’s art that makes me question it a lot when it is done in a traditional way. This includes my own art. The famous artists of the past are admired because they found the most powerful ways available in their era to express themselves, or to express an idea of general interest. They also found new ways of expression. Some great artists were entrepreneurs, some successful, some not so successful. I think you can compare Rubens to Steven Spielberg. Both orchestrated large scenes using the most powerful visualization techniques of their time, and neither did this alone.

Many say that a true artist chooses the best materials to express his idea. This can be said of both Rubens and Spielberg. That’s where my first problem lies: I tend to like certain materials, while I do not focus much on ideas. I like traditional paint and wood. So I mostly paint using traditional painting techniques and I sculpt in wood. Wrong approach! Today’s most powerful material is the byte. What? Yes, the byte. The fact that you’ve read this far proves the power of bytes. Bytes become even more powerful if you create games with them. Games, especially 3D games, deliver a total experience that takes possession of the player. It’s the most powerful and immersive way to express ideas currently known to mankind.

Yet the old techniques have not died completely. When you write a book, the reader immerses himself in it. While this requires effort from the reader, the immersion can be complete. The same is true for paintings, but they shall not be judged from their reproductions (displayed on either a computer screen or in print). A reproduction of a painting does not allow for immersion. It is simply impossible, believe it or not. The immersive qualities of a painting are crafted by the painter by means of continuous deployment. A painter steps back and forth in front of his work to test the immersive qualities. These differ depending on the distance to the work and the way the light falls on the painting. These qualities are completely absent from any type of reproduction.

With people staring into their tablets and smartphones all day, fewer people have time to go out and see actual paintings, let alone immerse themselves in them. Research has shown that if they go to a museum to look at art at all, they look for 9,000 milliseconds on average (it hurts too much to write this number down expressed in seconds, or even hours, so I’ll leave that as an exercise to the reader). Therefore, I fear for the future of painting in the traditional sense of the word. I am glad that I also have some power over bytes: the most powerful raw material of the modern age.

Some encouraging thoughts

I think guaranteed ways to waste time exist. It is a waste of time to do repetitive work. You’ve done it before, it is not a new experience. It is detrimental to your creativity and brainpower. Repetitive work should be automated, either by machines or by coding.

Bytes have a lot of power, but in the Artist's opinion nothing compares to recreating, with his own hands and traditional paint, the atmosphere of a landscape as he experienced it. This requires vision, experience, speed, creativity and courage. His own judgement is the harshest he can get. Nothing compares to holding an idea in his hands and turning it around. He knows that he has shaped it out of wood that didn’t really want to be shaped: wood that resisted like mad, but had to give in to his insistent chiseling.

What about the other Perl frameworks, Dancer and Mojolicious? How do they compare to Catalyst?

Dancer’s big strength is making things quick and easy for smaller apps. You don’t have to think in terms of OO unless you want to, and plugins generally shove a bunch of extra keywords into your namespace that are connected to global or per-request variables. Catalyst doesn’t have an exact opinion about a lot of the structure of your code, but it very definitely insists that you pick one and implement it. Dancer basically lets you do whatever you like without really thinking too much about it.

That really isn’t meant as a criticism. Somewhere along the line I picked up a commit bit to Dancer as well, and they’ve achieved some really good things. They provide something with as little conceptual overhead as possible for smaller apps, with a very direct mapping between the concepts involved and what’s actually going to happen in terms of request dispatch. Catalyst abstracts things more thoroughly, so there’s a trade-off there. I mean, I was saying before that empty methods with route annotations almost always end up getting some code in them eventually. Suppose you get to 1.0 and most of those methods are still empty. With Dancer, you might have been able to write a lot less code, or at least do a lot less thinking that turned out not to have been necessary. Equally, I’ve seen Dancer codebases that have got complicated enough to turn into a gnarly, tangled mess, and the developers are looking and thinking, “You know, maybe I was wrong about Catalyst being overkill…”

I love the accessibility of Dancer though, and the team are great guys. I’ve seen the Catalyst community send people who’re clearly lost trying to scale the learning curve to use Dancer instead. I’ve also seen the Dancer community tell people they’re doing enough complicated things at once that they should go and look at Catalyst instead. And hey, we both run on top of PSGI/Plack, so you can have /admin served by Dancer and everything else by Catalyst … or the other way around … or whatever.
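
Because both frameworks sit on PSGI/Plack, combining them comes down to URL-prefix routing. In Perl this is typically done with Plack::Builder’s mount keyword. The sketch below shows the same longest-prefix idea in Python using WSGI, Python’s rough counterpart to PSGI, because it is only meant to illustrate the principle. The names url_map, text_app and call, and the two stand-in apps, are hypothetical. They are not part of Plack, Dancer or Catalyst.

```python
from typing import Callable, Iterable

# A WSGI app takes (environ, start_response) and returns an iterable of bytes.
WSGIApp = Callable[[dict, Callable], Iterable[bytes]]


def text_app(label: str) -> WSGIApp:
    """Hypothetical stand-in app: replies with its label and the path it received."""
    def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
        start_response("200 OK", [("Content-Type", "text/plain")])
        return [f"{label}: {environ['PATH_INFO'] or '/'}".encode()]
    return app


def url_map(mounts: dict[str, WSGIApp]) -> WSGIApp:
    """Send each request to the app mounted at the longest matching path prefix."""
    # Longest prefix first, so "/admin" is tried before the catch-all "/".
    ordered = sorted(mounts.items(), key=lambda item: len(item[0]), reverse=True)

    def dispatcher(environ: dict, start_response: Callable) -> Iterable[bytes]:
        path = environ.get("PATH_INFO", "")
        for prefix, app in ordered:
            base = prefix.rstrip("/")  # "/" becomes "", which matches every path
            if path == base or path.startswith(base + "/"):
                # Shift the matched prefix from PATH_INFO into SCRIPT_NAME,
                # so the mounted app sees paths relative to its mount point.
                env = dict(environ)
                env["SCRIPT_NAME"] = environ.get("SCRIPT_NAME", "") + base
                env["PATH_INFO"] = path[len(base):]
                return app(env, start_response)
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"Not Found"]

    return dispatcher


# "/admin" goes to one framework's app, everything else to the other.
site = url_map({
    "/admin": text_app("Dancer admin"),
    "/": text_app("Catalyst main"),
})


def call(path: str) -> str:
    """Issue a fake GET for 'path' and return the response body as text."""
    environ = {"REQUEST_METHOD": "GET", "SCRIPT_NAME": "", "PATH_INFO": path}
    return b"".join(site(environ, lambda status, headers: None)).decode()


print(call("/admin/users"))  # Dancer admin: /users
print(call("/blog/1"))       # Catalyst main: /blog/1
```

The dispatcher moves the matched prefix into SCRIPT_NAME. This lets each mounted app build links relative to wherever it is mounted, without hard-coding the prefix.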

Meantime, Mojolicious is making its trade-offs in a different dimension. Sebastian Riedel, the project founder, was also the founder of Catalyst. He left the Catalyst project just before the start of, I think, the 5.70 release cycle. By then we’d acquired a lot of users who’d bet the business on Catalyst’s stability, and a lot of contributors who thought that was OK. Sebastian got really, really frustrated at the effort involved in maintaining backwards compatibility.

So he went away and rethought everything. Mojo has ended up with its own implementations of a lot of things, focused on where they think people’s needs in web development are going over the next few years. A company heavily using Mojo recently open sourced a real-time IRC web client that does a lot of clever stuff, and Mojolicious helped them with that substantially. But the price is that stepping outside the ecosystem is quite jarring, because the standards and conventions aren’t quite the same as in the rest of modern Perl. Mojolicious has a very well-documented, staged backcompat breakage policy which they stick to religiously. For “move fast and break stuff” style application development, I think they’ve got the policy pretty much spot on, and they’re reaping a lot of advantages from it.

Some systems are different, though. Say you want to be able to ignore a section that users are happy with, and then pick it up down the line when the state of the world changes and it needs extending. A typical example is the boring business system, where finding out 5 seconds sooner that somebody edited something would be nice to have, but what really matters is that the end result is correct. For those, I’m not sure I’m quite so fond of the approach. If you were going to name a typical backend for each framework, you could say that Dancer’s would be straight SQL talking to MySQL, Catalyst’s would be some sort of ORM talking to PostgreSQL, and Mojolicious’ would be a client library of some sort talking to MongoDB. Everybody’s going to see some criticism of the other two implicit in each of those, but they should take it as a compliment to their favourite. If they don’t, that’s because my metaphor failed rather than anything else.

I can’t recall seeing an app that was a reasonable example of its framework where I really thought either of the other two would have worked out nearly as well. The one exception was a fairly small Catalyst app that was, if anything, a bit small for Catalyst to make sense. Even so, it turned into a crawling horror when ported to Mojolicious. But then again, when I showed that to Sebastian and asked, “am I missing something here?”, the only reply I got was some incoherent screaming. The underlying meaning was, “WHAT HAVE THEY DONE TO MY POOR FRAMEWORK?! CANNOT UNSEE, CANNOT UNSEE!” So I think, as with Dancer, it’s a matter of there being more than one way to do it, and one or the other is going to be more reasonable depending on the application.

What’s in Catalyst’s wish-list, in which direction is the project moving and do you think that someday Catalyst’s adoption will be so widespread that it will become the reason for restoring the P=Perl back to LAMP?

The basic goals at the moment are a mixture of three things. First, adding convenience features for situations that are common enough now to warrant them but weren’t, say, five years ago. Second, continuing to refactor the core to enable easier and cleaner extension. Third, figuring out a path forward that lets us clean up the API and push new users onto the best paths, without punishing people who use older approaches.

As for putting the P back in LAMP? I’ve regarded it as standing for “Perl, PHP or Python” for as long as I can remember. It doesn’t seem to me that treating it as a zero sum game is actually useful: in the world of open source, crushing your enemies might be satisfying but encouraging them and then stealing all their best ideas seems like much more fun to me.

What are your thoughts on Perl 6 and given the opportunity, would you someday re-write Catalyst in it?

I’ve spent a fair amount of time and energy over the years making sure that the people thinking hard about language design for both the Perl5 and Perl6 languages talk to each other and share ideas and experiences reasonably often, but I’m perfectly comfortable with Perl5 as my primary production language for the moment, and so long as the people who actually know what they’re doing with this stuff are paying attention I don’t feel the need to that much.

One of the things I’m really hoping works out is the whole MoarVM plan, wherein Rakudo will end up with a solid virtual machine that was designed from the start to be able to embed libperl and thereby call back and forth between the languages. So if that plan comes off, then I don’t think you’d ever write Catalyst in Perl6 so much as you could write parts of Catalyst apps in Perl6 if you wanted to… and maybe one day there’d be something that uses features that are uniquely Perl6-like that turns out to be technologically more awesome. You can still write parts of those apps in Perl5 if it makes sense, but I don’t think looking at the two languages in the Perl family as some sort of competition is that useful. I much prefer a less dogmatic approach, similar to the saner of the people I know who are into various Lisp dialects.

So it’s more about experimenting in similar spaces and learning and sharing things – and while being a language family is often cited as a reason why Lisp never took over the world … Perl taking over the world got us Matt’s Script Archive and a generation of programmers who thought the language was called PERL and fit only for generating write-only line noise whereas being a language family seems to have pretty effectively given Lisp immortality, albeit a not-entirely-mainstream sort of immortality.

I think, over a long enough timeline, I could pretty much live with that (absent a singularity or something I’ll probably be dead in about the number of years that Lisp has existed), and I think if there is a singularity then programming languages afterwards won’t look anything like they do now… although, admittedly, I still wouldn’t be surprised if my favorite of whatever they do look like was designed by Larry Wall.

Nikos Vaggalis has a BSc in Computer Science and an MSc in Interactive Multimedia. He works as a Database Developer with Linux and Ingres, and programs in both Perl and C#. He is interested in anything related to RDBMSs and loves crafting complex SQL queries for generating reports. As a journalist, he writes articles, conducts interviews and reviews technical IT books.

Does all that flexibility come at a price?

The key price is that while there are common ways to do things, you’re rarely going to find One True Way to solve any given problem. It’s more likely to be “here’s half a dozen perfectly reasonable ways, which one is best probably depends on what the rest of your code looks like”, plus while there’s generally not much integration specific code involved, everything else is a little more DIY than most frameworks seem to require.

I can put together a Catalyst app that does something at least vaguely interesting in a couple of hours. But the sort of 5-minute wow moment that intro screencasts and marketing copy seem to aim for just doesn’t happen. Also, when people first approach Catalyst, they often get a bit overwhelmed by the various features and the ways you can put them together.

There’s a reflex of “this is too much, I don’t need this!”. But then a fair percentage of them come back two or three years later, have another look and go “ah, I see why I want all these features now: I’d have written half as much code if I’d used the Catalyst features I thought I didn’t need”. Similarly, the wow moment usually comes three or six months into a project. That's when you realise that adding features is still going quickly because the code has naturally shaken out into a sensible structure.

So, there’s quite a bit of learning, and it’s pretty easy for it to look like overkill if you haven’t already experienced the pain involved. It’s a lot like the use strict problem writ large. Declaring variables with ‘my’ in appropriate scopes, rather than making it up as you go along, takes more thinking and more effort to begin with. So it’s not always easy to get across that it’s worth it until the prospective user has had blue daemons fly out of his nose a couple of times, from mistakes a more structured approach would have avoided.

So, it’s flexibility at the expense of a steep learning curve. Apart from that, if I could compare Catalyst to Rails, I would say that Rails is more like a shepherd guiding the herd the way it thinks they should go, while Catalyst allows room to move and make your own decisions. Is that a valid interpretation?

It seems to me that Rails is very much focused on having opinions, so there’s a single obvious answer for all the common cases. Where you choose not to use a chunk of the stack, whatever replaces it is similarly a different set of opinions. Catalyst, by contrast, definitely focuses on apps that are going to end up large enough to have enough weird corners that you’ll need to make your own choices. So Rails is significantly better at making easy things as easy as possible. Catalyst seems to do better at making hard things reasonably natural, if you’re willing to sit down and think about it.

I remember talking to a really smart Rails guy over beer at a conference (possibly in Italy) and the two things I remember the most were him saying “my customers’ business logic just isn’t that complicated and Rails makes it easy to get it out of the way so I can focus on the UI”, and when I talked about some of the complexities I was dealing with, his first response was, “wait, you had HOW many tables?”.

So while they share, at least very roughly, the same sort of view of MVC, they’re optimised very differently in terms of user affordances for developers working with them. Not so long ago, somebody I know who’s familiar with Perl and Ruby was talking to me about a new project. I ended up saying: “Build the proof of concept with Rails. If the logic’s complicated enough to make you want to club people to death with a baby seal, point the DBIx::Class schema introspection tool at your database and switch to Catalyst at that point”.

But surely, like Rails, Catalyst offers functionality out of the box too. What tasks does Catalyst take care of for me and which ones require manual wiring?

There’s a huge ecosystem of plugins, extensions and so forth in both cases but there’s a stylistic difference involved. Let me talk about the database side of things a sec, because I’m less likely to get the Rails-side part completely wrong.

Every Rails tutorial I’ve ever seen begins “first, you write a migration script that creates your table” … and then, once you’ve got the table, your class should just pick up the right attributes from the columns in the database. That’s … that’s how you start, unless you want to do something non-standard (which I’m sure plenty of people do, but it’s an active deviation from the default). Whereas when you start a Catalyst app, your first question is “do I even want to use a database here?”

Then, assuming you do, DBIx::Class is probably the default choice. But if you’ve got a stored-procedure-oriented database to interface to, you probably don’t need it, and for code that’s almost all insanely complex aggregates, objects really aren’t a huge win. Still, let’s assume you’ve gone for DBIx::Class.

Now you ask yourself “do I want Perl to own the database, or SQL?” In the former case you’ll write a bunch of DBIx::Class code representing your tables, and then tell it to create them in the database; in the latter you’ll create the tables yourself and then generate the DBIx::Class code from the database. There’s no fixed opinion on which is best: generally, I find that if a single application owns the database then letting the DBIx::Class code generate the schema is ideal, but if you’ve got an existing database that a bunch of other apps talk to as well, you’re probably better off having the schema managed in some way agreed between the teams for all those apps, and generating the DBIx::Class code from a scratch database.

Both of those are pretty much first-class approaches, and y’know, if your app owns the database but you’ve already got a way of versioning the schema that works, then I don’t see why I should stop you from doing that and generating the DBIC code anyway. So it’s not so much about whether manual wiring is required for a particular task, but about how much freedom you have to pick an approach to a task, and how many decisions that freedom requires before you know how to fire off the relevant code to set things up. I mean, whether you classify code as boilerplate or not depends on whether you ever foresee wanting to change it.

So when you first create a Catalyst controller, you often end up with methods that participate in dispatch (they have routing information attached to them) but are completely empty of code, which tends to look a little bit odd. So you often get questions from newbies like “why do I need to have a method there when it doesn’t do anything?” But then you look at the code again when you’re getting close to feature complete, and almost all of those methods have code in them, because that’s how the logic tends to fall out.

There are two reasons why that’s actively a good thing. First, because there was already a method there, even if it was a no-op to begin with, the fact that it’s a method is a big sign saying “it’s totally OK to put code here if it makes sense”. That’s a nice reminder, and it makes it quite natural to structure your code in a way that flows nicely. Second, once you add it all up, any other approach would involve the time to declare non-method routes plus the time to redeclare as methods every route that acquired logic. So if most of your methods end up with code in them, then overall, for reasonably complex stuff, the Catalyst style ends up being less typing than anything else would be. But again, we’re consciously paying a little more upfront effort when you’re starting out in order to enable maintainability down the road.

It’s easy to forget that Catalyst is not just a way of building sites, but also a big, big project in software architecture/engineering terms, built with best practices in mind.

Well, it is and it isn’t: there’s quite a lot of code in there that exists not to support best practices, but to avoid forcing people to rewrite code until they’re adding features to it. If you’ve got six years’ worth of development and a couple of hundred tables’ worth of business model, “surprise! we deleted a bunch of features you were using!” isn’t that useful, even when those features only existed because our design sucked (in hindsight, at least).

I’d say, yeah, things like Chained dispatch, the adaptor model and the support for roles and traits pretty much everywhere enable best practices as we currently understand them. But there’s also a strong commitment to only making backwards-incompatible changes when we really have to, because the more of those we make, the less likely people are to upgrade to a version of Catalyst that makes it easy to write new code in a way that sucks less (or, at least, differently).

But there’s a strong sense, in the ecosystem and in the way the community tends to do things, of trying to make it possible to do things as elegantly as possible, even with a definition of elegant that evolves over time. So you might wish that your code from, say, 2008 looked a lot more like the code you’re writing in 2013, but the two can coexist just fine until there’s a big feature push on the 2008 code, and then you refactor and modernise as you go. We’ve always had a bias towards making things possible as extensions, prioritising making more things possible as extensions over adding things to the core.

So, for example, metacpan.org is a Catalyst app using Elasticsearch as a backend, and people are using assorted other non-relational things and getting on just fine. Back in 2006, the usual ORM switched from Class::DBI to DBIx::Class and it wasn’t a big deal (though DBIx::Class got featureful enough that people’s attempts to obsolete it have probably resulted in more psychiatric holds than CPAN releases). And a while back we swapped out our own Catalyst::Engine:: system for Plack code implementing the PSGI spec; that wasn’t horribly painful, and it opened up a whole extra ecosystem (PSGI being a specification for abstracting away the HTTP server environment).

Even in companies conservative enough to still be running Perl 5.8.x, most of the time you tend to find that they’ve updated the ecosystem to reasonably recent versions, so they’re sharing the same toolkits as the new code in greenfield projects. So we try to avoid getting too out of date without gratuitously breaking existing production code: we nudge people towards more modern patterns of use, and we don’t interfere with people who love the bleeding edge, but we don’t force it on the users who don’t, either. So sometimes things take longer to land than people might like. There’s a lot of stuff to understand, but if you’re thinking in terms of core business technology rather than hacking something out that you’ll rewrite entirely in a year when Google buys you or whatever, I think it’s a pretty reasonable set of trade-offs.

In the forthcoming third and last part of the interview, we talk about the other Perl frameworks, Dancer and Mojolicious, the direction in which the project is moving, and whether Perl 6 is a viable option for web development.

Nikos Vaggalis has a BSc in Computer Science and an MSc in Interactive Multimedia. He works as a Database Developer with Linux and Ingres, and programs in both Perl and C#. He is interested in anything related to the RDBMS and loves crafting complex SQL queries for generating reports. As a journalist, he writes articles, conducts interviews and reviews technical IT books.

We talk to Matt S. Trout, technical team leader at consulting firm Shadowcat Systems Limited, creator of the DBIx::Class ORM and of many other CPAN modules, and of course co-maintainer of the Catalyst web framework. These are some of his activities, but for this interview we are interested in Matt’s work with Catalyst.

Our discussion turned out not to be just about Catalyst, though. While discussing the virtues of the framework, we learned, in Matt’s own colourful language, what makes other popular web frameworks tick, and we managed to bring out the consultant in him, who shared invaluable thoughts on architecting software as well as on the possibility of Perl 6 someday replacing Perl 5 for web development.

We concluded that there’s no framework that wins by knockout; the game will be decided on points, awarded by the final judge: your needs.

So, Matt, let’s start with the basics. Catalyst is an MVC framework. What is the MVC pattern and how does Catalyst implement it?

The fun part about MVC is that if you go through a dozen pages about it on Google you’ll end up with at least ten different definitions. The two that are probably most worthwhile paying attention to are the original and the Rails definitions.

The original concept of MVC came out of the Xerox PARC work and was invented for Smalltalk GUIs. It posits a model which is basically data that you’re live-manipulating, a view which is responsible for rendering that, and a controller which accepts user actions.

The key thing about it was that the view knew about the model, but nothing else. The controller knew about the model and the view, while the model was treated like a mushroom, kept in the dark; the view/controller classes handled changes to the model by using the observer pattern, so an event got fired when the model changed (you’ll find that angular.js, for example, works on pretty much this basis; it’s very much a direct-UI-side pattern).
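To make the observer arrangement concrete, here is a minimal Perl sketch of classic MVC, assuming a trivial counter as the model. The class names (Counter, CounterView, CounterController) are hypothetical; the point is only the direction of knowledge: the model keeps a list of callbacks and fires them when it changes, the view subscribes to the model, and the controller turns a user action into a model update.

```perl
use strict;
use warnings;

package Counter;    # model: knows nothing about views or controllers
sub new       { my ($class) = @_; return bless { value => 0, observers => [] }, $class }
sub subscribe { my ($self, $cb) = @_; push @{ $self->{observers} }, $cb; return }
sub value     { my ($self) = @_; return $self->{value} }
sub set_value {
    my ($self, $v) = @_;
    $self->{value} = $v;
    $_->($self) for @{ $self->{observers} };    # fire a change event to every observer
    return;
}

package CounterView;    # view: knows about the model only
sub new {
    my ($class, $model) = @_;
    my $self = bless { rendered => '' }, $class;
    $model->subscribe(sub { $self->render(shift) });    # re-render whenever the model changes
    return $self;
}
sub render   { my ($self, $model) = @_; $self->{rendered} = 'Count: ' . $model->value; return }
sub rendered { my ($self) = @_; return $self->{rendered} }

package CounterController;    # controller: knows the model and the view
sub new   { my ($class, %args) = @_; return bless {%args}, $class }
sub click { my ($self) = @_; $self->{model}->set_value($self->{model}->value + 1); return }

package main;

my $model      = Counter->new;
my $view       = CounterView->new($model);
my $controller = CounterController->new(model => $model, view => $view);
$controller->click for 1 .. 3;    # three simulated user actions
print $view->rendered, "\n";      # Count: 3
```

The controller never calls the view directly here; the view stays current purely because it observes the model.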

Now, what Rails calls MVC (and, pretty much, what Catalyst does too) is a sort of attempt to squash that into the server side, at which point your view is basically the sum of the templating system you’re using plus the browser’s rendering engine, and your controller is the sum of the browser’s dispatch of links and forms and the code that handles that on the server side. So, server side, you end up with the controller being the receiver for the HTTP request, which picks some model data and puts it in … usually some sort of unstructured bag. In Catalyst we have a hash attached to the request context called the stash; in Rails they use the controller’s instance variables. Then you hand that unstructured bag of model objects off to a template, which renders it: this is your server-side view.

So, the request cycle for a traditional HTML rendering Catalyst app is:

- the request comes in

- the appropriate controller is selected

- Catalyst calls the controller code, which performs any required alterations to the model

- the controller then tells the view to render a named template with a set of data
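The four steps above can be sketched in plain Perl, without Catalyst itself. Everything here (MiniApp, the controller table, the template sub) is a hypothetical stand-in: a hash of paths plays the dispatcher, a per-request hashref plays the context with its stash, and a sub that interpolates stash values plays the view.

```perl
use strict;
use warnings;

package MiniApp;

my %users = (bob => { name => 'Bob', logins => 0 });    # stand-in model data

my %templates = (                                       # stand-in view: named templates
    'user/show' => sub {
        my ($stash) = @_;
        return "<h1>$stash->{user}{name}</h1><p>Logins: $stash->{user}{logins}</p>";
    },
);

my %controllers = (                                     # stand-in controller actions
    '/user/bob' => sub {
        my ($c) = @_;
        my $user = $users{bob};
        $user->{logins}++;                      # alter the model
        $c->{stash}{user}     = $user;          # fill the unstructured bag
        $c->{stash}{template} = 'user/show';    # choose a template by name
        return;
    },
);

sub handle_request {
    my ($path) = @_;
    my $c = { stash => {} };                                        # 1. request comes in
    my $action = $controllers{$path} or return '404 Not Found';    # 2. select the controller
    $action->($c);                                                  # 3. run the controller code
    my $template = $templates{ $c->{stash}{template} };             # 4. view renders the stash
    return $template->($c->{stash});
}

package main;

print MiniApp::handle_request('/user/bob'), "\n";    # <h1>Bob</h1><p>Logins: 1</p>
```

The controller never builds HTML and the template never changes data, which is the separation the rest of this answer argues for.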

The fun part, of course, is that for things like REST APIs you tend to think in terms of serialize/deserialize rather than event->GUI change, so at that point the controller basically becomes “the request handler” and the view part becomes pretty much vestigial, because the work of translating that data into something to display to the user is done elsewhere, usually in client-side JavaScript (well, assuming the client is a user-facing app at all, anyway).

So, in practice, a lot of stuff isn’t exactly MVC … but there have been so many variants and reinterpretations of the pattern over the years that, above all, it has come to mean “keep the interaction flow, the business logic, and the display separate … somehow”, which is clearly a good thing, and idiomatic Catalyst code tends to do so. The usual rule of thumb is “if this logic could make sense in a different UI (e.g. a CLI script or a cron job), then it probably belongs inside the domain code that your web app regards as its model”; plus “keep the templates simple, and keep their interaction with the model read-only; anything clever or mutating probably belongs in the controller”.

So you basically strive to push anything non-cosmetic out of the view, and then anything non-current-UI-specific out of the controller, and the end result is at least approximately MVC for some of the definitions, and ends up being decently maintainable.

Can you swap template engines for the view as well as, at the backend, swap DBMSs for the model?

Access to the models and views is built atop a fairly simple IoC (inversion of control) system, so basically Catalyst loads and makes available whatever models and views are provided, and then the controller asks Catalyst for the objects it needs. So the key thing is that a single view is responsible for one view onto the application; the templating engine is, in effect, an implementation detail, and there are a bunch of view base classes that mean you don’t have to worry about it. But if you had an app with a main UI and an admin UI, you might decide to keep both those UIs within the same Catalyst application and have two views that use the same templating system but completely different sets of templates, HTML style, etc.
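A toy version of that idea, assuming a hypothetical Registry in place of Catalyst's own component loading: the application registers named components once, and a controller asks for a view by name. Two views share one (very crude) templating class but carry different templates, mirroring the main UI / admin UI example.

```perl
use strict;
use warnings;

package TemplateView;    # one hypothetical "templating engine" class
sub new { my ($class, %args) = @_; return bless { templates => $args{templates} }, $class }
sub render {
    my ($self, $name, $vars) = @_;
    my $tpl = $self->{templates}{$name} // die "no template '$name'";
    $tpl =~ s{\[%\s*(\w+)\s*%\]}{$vars->{$1} // ''}ge;    # tiny [% var %] substitution
    return $tpl;
}

package Registry;    # hypothetical IoC container: name => already-built component
my %components;
sub register { my ($class, $name, $obj) = @_; $components{$name} = $obj; return }
sub component {
    my ($class, $name) = @_;
    return $components{$name} // die "no component '$name'";
}

package main;

# Same engine class, two views with entirely different templates and style.
Registry->register('View::Main'  => TemplateView->new(templates => { hello => '<p>Hi [% user %]!</p>' }));
Registry->register('View::Admin' => TemplateView->new(templates => { hello => '<div class="admin">User: [% user %]</div>' }));

# A controller only names the view it wants; it never constructs one.
for my $view_name (qw(View::Main View::Admin)) {
    print Registry->component($view_name)->render(hello => { user => 'bob' }), "\n";
}
```

Swapping the templating engine would mean registering a different class under the same names; the controller loop would not change.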

In terms of models, if you need support from your web framework to swap database backends, you’re doing something horribly wrong. The idea is that your domain model code just exposes methods that the rest of the code uses; normally it doesn’t even live in the Catalyst Model/ directory. What lives there are adaptor classes that basically bolt external code into your application.

Because the domain code shouldn’t be web-specific in the first place, you have some slightly more specialised adaptors (notably Catalyst::Model::DBIC::Schema, which makes it easier to do a bunch of clever things involving DBIx::Class), but the DBIx::Class code, which is what talks to your database for you, is outside the scope of the Catalyst app itself.

The web application should be designed as an interface to the domain model which not only makes things a lot cleaner, but means that you can test your domain model code without needing to involve Catalyst at all. Running a full HTTP request cycle just to see if a web-independent calculation is implemented correctly is a waste of time, money and perfectly good electricity!
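As a sketch of what testing the domain model directly can look like, here is a hypothetical Invoice class with no knowledge of HTTP or Catalyst, checked with the core Test::More module. No request cycle, server or template is involved.

```perl
use strict;
use warnings;

package Invoice;    # hypothetical domain class: no HTTP, no Catalyst
sub new {
    my ($class, %args) = @_;
    return bless { lines => $args{lines} // [], tax_percent => $args{tax_percent} // 0 }, $class;
}
sub subtotal {
    my ($self) = @_;
    my $sum = 0;
    $sum += $_->{qty} * $_->{unit_price} for @{ $self->{lines} };
    return $sum;
}
sub total {
    my ($self) = @_;
    my $subtotal = $self->subtotal;
    return $subtotal + $subtotal * $self->{tax_percent} / 100;
}

package main;
use Test::More;    # core module; exercises the domain code directly

my $inv = Invoice->new(
    lines       => [ { qty => 2, unit_price => 10 }, { qty => 1, unit_price => 5 } ],
    tax_percent => 20,
);
is($inv->subtotal, 25, 'subtotal sums the line items');
is($inv->total,    30, 'total adds the tax');
done_testing();
```

A Catalyst model adaptor could expose the same class to controllers, but these tests would not need to change.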

So, Catalyst isn’t so much DBMS-independent as domain-implementation-agnostic. There are Catalyst apps that don’t even have a database, that manage, for example, LDAP trees, or serve files from disk (e.g. the app for our advent calendar). The model/view instantiation stuff is useful, but the crucial advantage is cultural. It’s not so much about explicitly building for pluggability as about refusing to impose requirements on the domain code, at which point you don’t actually need to implement anything specific to be able to plug in pretty much whatever code is most appropriate. Sometimes opinion is really useful. Opinion about somebody else’s business logic, on the other hand, should in my experience be left to the domain experts rather than the web architect.

Catalyst also has a RESTful interface. How is the URI mapped to an action?

The URI mapping works the same way it always does. Basically, you have methods that are each responsible for a chunk of the URL, so for a URL like /domain/example.com/user/bob you’d have basically a method per path element: the first one sets up any domain-generic stuff and the base collection, the second pulls the domain object out of the collection, then you go from there to a collection of users for that domain and pull out the specific user. Catalyst’s chained dispatch system is basically entirely oriented around the URL space, drilling down through representations/entities, which is a key thing to do to achieve REST but is a good idea in terms of URL design anyway. Plus, because the core stuff is all about path matching, it becomes pretty natural to handle HTTP methods last. So there’s Catalyst::Action::REST, and the core method-matching stuff, that make it cleaner to do that, but basically they both just save you writing:

if ($c->req->method eq 'GET') { ... } elsif ($c->req->method eq 'POST') { ... }  # etc.
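The method-per-path-element idea can be imitated in plain Perl. This is not how Catalyst is implemented: in a real controller each link would be a method declared with attributes such as :Chained, :PathPart and :CaptureArgs, and the lookups would go through the model. Here a hypothetical in-memory %directory stands in for the data, and each link collapses the collection and lookup steps for one path part. Each link consumes its chunk of the path, leaves its entity in a shared context, and stops the chain when nothing is found.

```perl
use strict;
use warnings;

# Hypothetical data standing in for the domain model.
my %directory = (
    'example.com' => { users => { bob => { name => 'bob', role => 'admin' } } },
);

# Each link: [ path part, number of captured args, handler ].
my @chain = (
    [ domain => 1, sub { my ($c, $name) = @_; $c->{domain} = $directory{$name} } ],
    [ user   => 1, sub { my ($c, $name) = @_; $c->{user}   = $c->{domain}{users}{$name} } ],
);

sub dispatch {
    my ($path) = @_;
    my @parts = grep { length } split m{/}, $path;
    my $c = {};
    for my $link (@chain) {
        my ($part, $nargs, $handler) = @$link;
        return undef unless @parts && shift(@parts) eq $part;    # path part must match
        my @args = splice(@parts, 0, $nargs);                    # captured arguments
        return undef unless @args == $nargs;
        $handler->($c, @args) or return undef;                   # entity not found
    }
    return @parts ? undef : $c;    # leftover path segments mean no match
}

my $c = dispatch('/domain/example.com/user/bob');
print "found $c->{user}{name} ($c->{user}{role})\n" if $c;
```

Because every link has already resolved its entity by the time the chain ends, deciding what to do for GET, POST and so on can happen last, against an object that is known to exist.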

Of course you can do RESTful straight HTTP+HTML UIs, although personally I’ve found that style a little contrived in places. For APIs, though, the approach really shines. API code is going to be using a serializer/deserializer pair (usually JSON these days) instead of form parsing and a view, but apart from that, writing the logic isn’t hugely different. RESTful isn’t really about a specific interface; it’s about how you use the capabilities available. And the URI mapping and request dispatch cycle is a very rich set of capabilities, with a bunch of places to fiddle with dispatch during the matching process. Catalyst::Action::REST basically hijacks the part where Catalyst would normally call a method and calls a method based on the HTTP method instead; so, say, instead of user you’d have user_GET called. There’s also Catalyst::Controller::DBIC::API, which can provide a fairly complete REST-style JSON API onto your DBIx::Class object graph.
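The user versus user_GET idea can be sketched with Perl's built-in can method. This imitates the behaviour described for Catalyst::Action::REST rather than reproducing its code; the controller class, its data and the 405 fallback are assumptions made for the example.

```perl
use strict;
use warnings;

package My::UserController;    # hypothetical controller with per-HTTP-method handlers
sub new         { my ($class) = @_; return bless { users => { bob => { name => 'bob' } } }, $class }
sub user_GET    { my ($self, $name) = @_; return [ 200, $self->{users}{$name} ] }
sub user_DELETE { my ($self, $name) = @_; delete $self->{users}{$name}; return [ 204, undef ] }

package main;

# After path matching has picked the action "user", choose "<action>_<METHOD>".
sub dispatch_by_method {
    my ($controller, $action, $http_method, @args) = @_;
    my $handler = $controller->can("${action}_$http_method")
        or return [ 405, "Method $http_method not allowed" ];
    return $controller->$handler(@args);
}

my $ctl = My::UserController->new;
my $res = dispatch_by_method($ctl, 'user', 'GET', 'bob');
print "$res->[0] $res->[1]{name}\n";    # 200 bob
```

Adding support for another verb is then just a matter of defining another user_<METHOD> method.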

So again it’s not so much that we have specific support for something, but that the features provide mechanism and then the policy/patterns you implement using those are enabled rather than dictated by the tools. I think the point I’m trying to make is that REST is about methods as verbs and about entities as first class things so it implies good URL design … but you can do good URL design and not do the rest of REST, it’s just that they caught on about the same time.

What about plugging CPAN modules in? I understand that this is another showcase of Catalyst’s extensibility. Can any module be used, or must they adhere to a public interface of some sort?

There’s very little interface; for most classes, either your own or from CPAN, Catalyst::Model::Adaptor can do the trick. There are three versions of it, which respectively:

- call new once, during startup, and hang onto the object

- call new no more than once per request, keeping the same object for the rest of the request once it’s been asked for

- call new every time somebody asks for the object
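The three lifecycles differ only in when new gets called. A rough sketch, using hypothetical Widget and Holder classes and counting constructor calls over two simulated requests that each ask for the model three times. (In the Catalyst::Model::Adaptor distribution these appear to correspond to Catalyst::Model::Adaptor, Catalyst::Model::Factory::PerRequest and Catalyst::Model::Factory; check its documentation before relying on that.)

```perl
use strict;
use warnings;

package Widget;    # any class with a new() method will do
my $constructed = 0;
sub new         { my ($class) = @_; $constructed++; return bless {}, $class }
sub constructed { return $constructed }

package Holder;    # hypothetical stand-in for the adaptor/factory models
sub new {
    my ($class, %args) = @_;
    my $self = bless { lifecycle => $args{lifecycle}, per_request => {} }, $class;
    $self->{instance} = Widget->new if $self->{lifecycle} eq 'once';    # built at startup
    return $self;
}
sub get {
    my ($self, $request_id) = @_;
    my $mode = $self->{lifecycle};
    return $self->{instance} if $mode eq 'once';
    # A real per-request model would be dropped when the request ends;
    # keying by request id keeps this sketch short.
    return $self->{per_request}{$request_id} //= Widget->new if $mode eq 'per_request';
    return Widget->new;    # 'every_time'
}

package main;

sub constructions_for {
    my ($lifecycle) = @_;
    my $before = Widget->constructed;
    my $holder = Holder->new(lifecycle => $lifecycle);
    for my $request_id (1, 2) {              # two requests...
        $holder->get($request_id) for 1 .. 3;    # ...each asking three times
    }
    return Widget->constructed - $before;
}

printf "%-11s %d\n", $_, constructions_for($_) for qw(once per_request every_time);
```

This prints 1, 2 and 6 constructions respectively.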

The first one is probably the most common, but it’s often nice to use the second approach so that your model can have a first-class understanding of, for example, which user is currently logged in, if any; the adaptor manages that lifecycle for you. Anything with a new method is going to work, which means any and all object-oriented code written according to convention in at least the past 10 years or so.

For anything else you break out Moose/Moo, and write yourself a quick normal class that wraps whatever crazy thing you’re using and now you’re back to it being easy (and you’ll probably find that class is more pleasant to use in all your code, anyway). Really, any attempt at automating the remaining cases would probably be more code to configure for whatever use-case you have than to just write the code to do it.
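As an illustration of that wrapping step, assume a hypothetical procedural library steered by a package global. The wrapper below is a plain Perl class (Moose or Moo would shorten the constructor, but are left out so the sketch needs only core Perl); once it has a new method, it is the kind of class an adaptor model can instantiate.

```perl
use strict;
use warnings;

# The hypothetical awkward legacy code: plain functions driven by a global.
package Legacy::Rates;
our $BASE = 'GBP';
my %per_gbp = (GBP => 1, EUR => 1.25, USD => 1.5);    # made-up rates
sub convert {
    my ($amount, $to) = @_;
    return $amount * $per_gbp{$to} / $per_gbp{$BASE};
}

# The "quick normal class": a constructor plus methods, hiding the global.
package My::RateConverter;
sub new {
    my ($class, %args) = @_;
    return bless { base => $args{base} // 'GBP' }, $class;
}
sub convert {
    my ($self, $amount, $to) = @_;
    local $Legacy::Rates::BASE = $self->{base};    # global changed only for this call
    return Legacy::Rates::convert($amount, $to);
}

package main;

my $from_gbp = My::RateConverter->new;
my $from_eur = My::RateConverter->new(base => 'EUR');
print $from_gbp->convert(100, 'USD'), "\n";    # 150
print $from_eur->convert(100, 'USD'), "\n";    # 120
```

Using local confines the global to a single call, so two converters with different bases can be used side by side.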

For example, sometimes you want a component that’s instantiated once, but then specialises itself as requested. A useful example would be “I want to keep the database connection persistent, but still have a concept of a current user to use to enforce restrictions on queries”. So there’s a role called Catalyst::Component::InstancePerContext that provides that: instead of using the Adaptor’s per-request version, you use a normal adaptor and apply that role to the class it constructs. That object will then get a method called on it once per request, which can return the final model object to be used by the controller code. I’ve probably expended more characters describing it than any given implementation takes, because it’s really just a couple of short methods. Besides, the most common case for it is DBIx::Class, and there’s also a PerRequestSchema role shipped with Catalyst::Model::DBIC::Schema (which is basically a DBIx::Class-specialised adaptor, remember) that reduces it to something like:

sub _build_per_request_schema {
    my ($self, $c) = @_;
    $self->schema->restrict_with_object($c->user);
}

… but again the goal isn’t so much to have lots of full-featured integration code, but to minimise the need to write integration code in the first place.
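The instantiate-once, specialise-per-request pattern can be sketched without Catalyst. The hook name build_per_context_instance appears to be the method Catalyst::Component::InstancePerContext requires implementing classes to provide (verify against its documentation); everything else (Shared::DB, Restricted::View, My::Model, and the hashref standing in for the context $c) is hypothetical. The expensive object is built once, while each request gets a cheap wrapper that knows the current user.

```perl
use strict;
use warnings;

package Shared::DB;    # expensive, persistent resource: built once
my $connections = 0;
sub new         { my ($class, $rows) = @_; $connections++; return bless { rows => $rows }, $class }
sub connections { return $connections }

package Restricted::View;    # cheap per-request wrapper enforcing the restriction
sub new { my ($class, %args) = @_; return bless {%args}, $class }
sub visible_rows {
    my ($self) = @_;
    return grep { $_->{owner} eq $self->{user} } @{ $self->{db}{rows} };
}

package My::Model;
sub new { my ($class, %args) = @_; return bless { db => Shared::DB->new($args{rows}) }, $class }
# Called once per request; returns the object the controller actually uses.
sub build_per_context_instance {
    my ($self, $c) = @_;
    return Restricted::View->new(db => $self->{db}, user => $c->{user});
}

package main;

my $model = My::Model->new(rows => [
    { id => 1, owner => 'alice' }, { id => 2, owner => 'bob' }, { id => 3, owner => 'alice' },
]);
for my $user (qw(alice bob)) {
    my $view = $model->build_per_context_instance({ user => $user });    # fake context
    printf "%s sees %s\n", $user, join ',', map { $_->{id} } $view->visible_rows;
}
```

Only one Shared::DB is ever constructed, however many requests come through.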

In the forthcoming second part of the interview, we talk about the flexibility of Catalyst, its learning curve, Ruby on Rails, and the framework in software engineering terms.

Git-ing Out Of Trouble

Git is a popular and powerful tool for managing source code, documentation, and really anything else made of text that you’d like to keep track of. With great power comes quite a lot of complexity however, and it can be easy to get into a tangle using this tool. With that in mind, I thought I’d share some tips for how to “undo” with git.

The closest thing to an undo command in git is git reset. You can undo various levels of thing, right up to throwing away changes that are already in your history. Let’s take a look at some examples, in order of severity.

Git Reset

Git reset without any additional arguments (technically it defaults to git reset --mixed) will simply unstage your changes. The changes will still be there, the files won’t change, but the changes you had already staged for your next commit will no longer be staged. Instead, you’ll see locally modified files. This is useful if you realise that you need to commit only part of the local modifications; git reset lets you unstage everything without losing changes, and then stage the ones you want.
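A quick way to see this behaviour is to script it against a throwaway repository. The Perl sketch below assumes git is on the PATH; the file name, commit identity and messages are made up. It stages an edit, runs git reset, and then asks git whether anything is still staged (git diff --cached --quiet) and whether the working copy still differs from the last commit (git diff --quiet).

```perl
use strict;
use warnings;
use Cwd qw(getcwd);
use File::Temp qw(tempdir);

my @who = qw(-c user.name=Demo -c user.email=demo@example.com -c commit.gpgsign=false);

sub git   { system('git', @_) == 0 or die "git @_ failed\n"; return }
sub spew  { my ($f, $s) = @_; open my $fh, '>', $f or die "$f: $!"; print {$fh} $s; close $fh or die $!; return }
sub slurp { my ($f) = @_; open my $fh, '<', $f or die "$f: $!"; local $/; return scalar <$fh> }

my $start = getcwd();
my $dir   = tempdir(CLEANUP => 1);
chdir $dir or die "chdir: $!";

git(qw(init -q));
spew('notes.txt', "v1\n");
git(qw(add notes.txt));
git(@who, qw(commit -q -m first));

spew('notes.txt', "v2\n");
git(qw(add notes.txt));    # the edit is now staged
git(qw(reset -q));         # same as: git reset --mixed

my $still_staged = system(qw(git diff --cached --quiet)) != 0;    # exit 1 means staged changes
my $modified     = system(qw(git diff --quiet)) != 0;             # exit 1 means unstaged changes
my $content      = slurp('notes.txt');
chdir $start or die "chdir: $!";

printf "staged: %s, modified: %s, file: %s",
    ($still_staged ? 'yes' : 'no'), ($modified ? 'yes' : 'no'), $content;
```

The expected result is nothing staged, the file still modified, and its content still v2.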

Git Reset --hard

Using the --hard switch is more destructive. This will discard all changes to tracked files since the last commit, regardless of whether they were staged or not (untracked files are left alone). It’s relatively difficult to lose work in git, but this is an excellent way of achieving just that! It’s very useful, though, when you realise you’ve gone off on a tangent or find yourself in a dead end, as git reset --hard will just put you back to where you were when you last committed. Personally I find it helpful to commit before going for lunch, as immediately afterwards seems to be my peak time for tangents, and I can then easily rescue myself.
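The same throwaway-repository approach shows what --hard does and does not touch. This sketch again assumes git is on the PATH and uses invented file names: it makes one unstaged edit, one staged edit and one brand-new untracked file, then runs git reset --hard.

```perl
use strict;
use warnings;
use Cwd qw(getcwd);
use File::Temp qw(tempdir);

my @who = qw(-c user.name=Demo -c user.email=demo@example.com -c commit.gpgsign=false);

sub git   { system('git', @_) == 0 or die "git @_ failed\n"; return }
sub spew  { my ($f, $s) = @_; open my $fh, '>', $f or die "$f: $!"; print {$fh} $s; close $fh or die $!; return }
sub slurp { my ($f) = @_; open my $fh, '<', $f or die "$f: $!"; local $/; return scalar <$fh> }

my $start = getcwd();
my $dir   = tempdir(CLEANUP => 1);
chdir $dir or die "chdir: $!";

git(qw(init -q));
spew($_, "v1\n") for qw(a.txt b.txt);
git(qw(add a.txt b.txt));
git(@who, qw(commit -q -m first));

spew('a.txt', "tangent\n");            # unstaged edit
spew('b.txt', "tangent\n");
git(qw(add b.txt));                    # staged edit
spew('scratch.txt', "untracked\n");    # never added to git

git(qw(reset -q --hard));              # back to the last commit

my %after        = map { ($_ => slurp($_)) } qw(a.txt b.txt);
my $scratch_kept = -e 'scratch.txt' ? 1 : 0;
chdir $start or die "chdir: $!";

print "a.txt: $after{'a.txt'}b.txt: $after{'b.txt'}scratch.txt kept: $scratch_kept\n";
```

Both tracked files come back as v1, while scratch.txt survives, because git reset --hard only resets tracked files; git clean is the separate command for removing untracked ones.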

Git Reset --hard [Revision]

Using --hard with a specific SHA1 will throw away all uncommitted changes to tracked files in your working copy and staging area, plus any commits made after the one you name. This is great if you’re regretting something, or have committed to the wrong branch (make a new branch from here, then use this technique on the existing branch to remove your accidental commits; I do this one a lot too). Use it with caution, though: if you have already pushed your branch somewhere else, your next push will need to use the -f switch to force it, and if anyone has pulled your changes, they are very likely to have problems, so this isn’t a recommended approach for changes that have already been pushed.
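The wrong-branch recovery in the parenthesis above can be scripted the same way. This sketch assumes git is on the PATH and uses HEAD~1 (the commit before the current one) where you would otherwise paste a SHA1; the branch names main and feature and the commit messages are chosen for the example.

```perl
use strict;
use warnings;
use Cwd qw(getcwd);
use File::Temp qw(tempdir);

my @who = qw(-c user.name=Demo -c user.email=demo@example.com -c commit.gpgsign=false);

sub git  { system('git', @_) == 0 or die "git @_ failed\n"; return }
sub spew { my ($f, $s) = @_; open my $fh, '>', $f or die "$f: $!"; print {$fh} $s; close $fh or die $!; return }
sub commit_file {
    my ($file, $message) = @_;
    spew($file, "$message\n");
    git('add', $file);
    git(@who, 'commit', '-q', '-m', $message);
    return;
}

my $start = getcwd();
my $dir   = tempdir(CLEANUP => 1);
chdir $dir or die "chdir: $!";

git(qw(init -q));
git(qw(symbolic-ref HEAD refs/heads/main));    # name the branch explicitly
commit_file('base.txt',    'base work');
commit_file('feature.txt', 'oops, meant for a feature branch');

git(qw(branch feature));            # 1. keep the accidental commit on a new branch
git(qw(reset -q --hard HEAD~1));    # 2. rewind main to the commit before it

chomp(my $main_commits    = `git rev-list --count main`);
chomp(my $feature_commits = `git rev-list --count feature`);
chomp(my $feature_tip     = `git log -1 --format=%s feature`);
my $file_gone = -e 'feature.txt' ? 0 : 1;
chdir $start or die "chdir: $!";

print "main: $main_commits commit(s); feature: $feature_commits commit(s), ",
    "tip '$feature_tip'; feature.txt removed: $file_gone\n";
```

The order matters: creating the branch first means the accidental commit is still referenced by something when main is rewound.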

Hopefully there are some tips there that will help you to get out of trouble in the unlikely event that you need them. When things go wrong, stay calm and remember that it happens to the best of us!

Lorna Jane Mitchell is a web development consultant and trainer from Leeds in the UK, specialising in open source technologies, data-related problems, and APIs. She is also an open source project lead, regular conference speaker, prolific blogger, and author of PHP Web Services, published by O’Reilly.

Do you want to know more about Git?

Lorna will be giving a full-day tutorial – ‘Git for Development Teams’ on Thursday 6th February 2014 in London. This tutorial is organized by FLOSS UK and O’Reilly UK Ltd. Please note that the early bird rates are available until January 14th.

Best Wishes and Happy Holiday to you!

Erlang is now over 25 years old. I’ve been involved with Erlang from the very start, and seen it grow from an idea into a fully-fledged programming language with a large number of users.

I wrote the first Erlang compiler, taught the first Erlang course, and with my colleagues wrote the first Erlang book. I started one of the first successful Erlang companies and have been involved with all stages of the development of the language and its applications.

In 2007 I wrote Programming Erlang (Pragmatic Bookshelf). It had been 14 years since the publication of Concurrent Programming in Erlang (Prentice Hall, 1993), and our users were crying out for a new book, so I gritted my teeth and started writing. I had the good fortune to have Dave Thomas as my editor, and he taught me a lot about writing. The first edition was pretty ambitious: I wanted to describe every part of the language and the major libraries, with example code, and show real-world examples that actually did things. So the book contained runnable code for things like a SHOUTcast server, so you could stream music to devices, and a full-text indexing system.

The first edition of Programming Erlang spurred a flurry of activity – the book sold well. It was published through the Pragmatic Press Beta publishing process. The beta publishing process is great – authors get immediate feedback from their readers. The readers can download a PDF of the unfinished book and start reading and commenting on the text. Since the book is deliberately unfinished they can influence the remainder of the book. Books are published as betas when they are about 70% complete.

On day one over 800 people bought the book, and on day two there were about a thousand comments on the errata page of the book. How could there be so many errors? My five-hundred-page book seemed to have about 4 comments per page. This came as a total shock. Dave and I slaved away, fixing the errata. If I’d known, I’d have taken a two-week holiday when the book went live.

A couple of months after the initial PDF version of the book, the final version was ready and we started shipping the paper version.

Then a strange thing happened. The Pragmatic Bookshelf (known as the Prags) had published an Erlang book, and word on the street was that it was selling well. In no time at all I began hearing rumours: O’Reilly was on the prowl looking for authors, and many of my friends were contacted to see if they were interested in writing Erlang books.

This is really weird: when you want to write a book you can’t find a publisher, but when an established publisher wants to publish a book on a particular topic it can’t find authors.

Here’s the timeline since 2007:

* 2007 – Programming Erlang – Armstrong – (Pragmatic Bookshelf)

* 2009 – ERLANG Programming – Cesarini and Thompson – (O’Reilly)

* 2010 – Erlang and OTP in Action – Logan, Merritt and Carlsson – (Manning)

* 2013 – Learn You Some Erlang for Great Good – Hebert – (No Starch Press)

* 2013 – Introducing Erlang: Getting Started in Functional Programming – St. Laurent (O’Reilly)

* 2013 – Programming Erlang – 2nd edition – Armstrong – (Pragmatic Bookshelf)

Erlang was getting some love so languages like Haskell needed to compete – Real World Haskell by Bryan O’Sullivan, John Goerzen and Don Stewart was published in 2008. This was followed by Learn You a Haskell for Great Good by Miran Lipovaca (2011).

My Erlang book seemed to break the ice. O’Reilly followed with Erlang Programming and Real World Haskell – which inspired No Starch Press and Learn You a Haskell for Great Good which inspired Learn You Some Erlang for Great Good and the wheel started to spin.

Fast Forward to 2013

I was contacted by the Prags: did my book want a refresh? What’s a refresh? The 2007 book was getting a little dated. Core Erlang had changed a bit, but the libraries had changed a lot, and the user base had grown. But also, and significantly for the 2nd edition, there were now four other books on the market.

My goals in the 1st edition had been to describe everything and to document everything that was undocumented. I wanted a book that was complete in its coverage, and I wanted a book for beginners as well as advanced users.

Now of course this is impossible. A book for beginners will have a lot of explanations that the advanced user will not want to read. Text for advanced users will be difficult for beginners to understand, or worse, impossible to understand.

When I started work on the 2nd edition I thought, “All I’ll have to do is spiff up the examples and make sure everything works properly.” I planned to drop some rather boring appendices, drop the odd chapter and add a new chapter on the type system… or so I thought.

Well, it didn’t turn out like that. My editor, the ever helpful Susanna Pfalzer, probably knew that, but wasn’t letting on.

In the event I wrote 7 new chapters, dropped some rather boring appendices and dropped some old chapters.

The biggest difference in the 2nd edition was redefining the target audience. Remember I said that the first edition was intended for advanced and beginning users? Well, now there were four competing books on the market. Fred Hebert’s book was doing a great job for the first-time users, with beautifully hand-drawn cartoons to boot. Francesco and Simon’s book was doing a great job with OTP, so now I could refocus the book and concentrate on a particular band of users.

But who? In writing the 2nd edition we spent a lot of time focusing on our target audience. When the first seven chapters were ready we sent them to 14 reviewers. There were 3 super-advanced users, the guys who know everything about Erlang: what they don’t know about Erlang could be engraved on the back of a tea-leaf. We also took four total beginners, guys who knew how to program in Java but knew no Erlang, and the rest were middling guys: they’d been hacking Erlang for a year or so but were still learning. We threw the book at these guys to see what would happen.

Surprise number one: some of the true beginners didn’t understand what I’d written – some of the ideas were just “too strange”. I was amazed – goodness gracious me, when you’ve lived, breathed, dreamt and slept Erlang for twenty-five years and you know Erlang’s aunty and grandmother, you take things for granted.

So I threw away the text that these guys didn’t understand and started again. One of my reviewers (a complete beginner) was having problems. I redid the text, they read it again, they still didn’t understand. “What are these guys, idiots or something? I’m busting a gut explaining everything and they still don’t understand!” And so I threw the text away (again), rewrote it and sent them the third draft.

Bingo! They understood! Happy days are here again! Sometimes new ideas are just “too strange” to grasp. But by now I was getting a feeling for how much explanation I had to add: it was about 30% more than I thought, but what the heck, if you’ve written a book you don’t want the people who’ve bought the damn thing to not read it because it’s too difficult.

I also had Bruce Tate advising me – Bruce wrote Learn 600 Languages in 10 Minutes Flat (officially known as Seven Languages in Seven Weeks). Bruce is a great guy who does a mean Texas accent if you feed him beer and ask nicely. Bruce can teach any programming language to anybody in ten seconds flat, so he’s a great guy to have reviewing your books.

What about the advanced guys? My book was 30% longer and was aimed at converting Java programmers who have seen the light, who wish to renounce their evil ways and convert to the joys of Erlang programming, but what about the advanced guys?

Screw the advanced guys – they wouldn’t even buy the book because they know it all anyway. So I killed my babies and threw out a lot of advanced material that nobody ever reads. My goal is to put the omitted advanced material on a website.

I also got a great tip from Francesco Cesarini: “They like exercises.” So I added exercises at the end of virtually every chapter.

So now there is no excuse for not holding an Erlang programming course: there are exercises at the end of every chapter!

Joe Armstrong, author of Programming Erlang, is one of the creators of Erlang. He has a Ph.D. in computer science from the Royal Institute of Technology in Stockholm, Sweden and is an expert on the construction of fault-tolerant systems. He has worked in industry, as an entrepreneur, and as a researcher for more than 35 years.